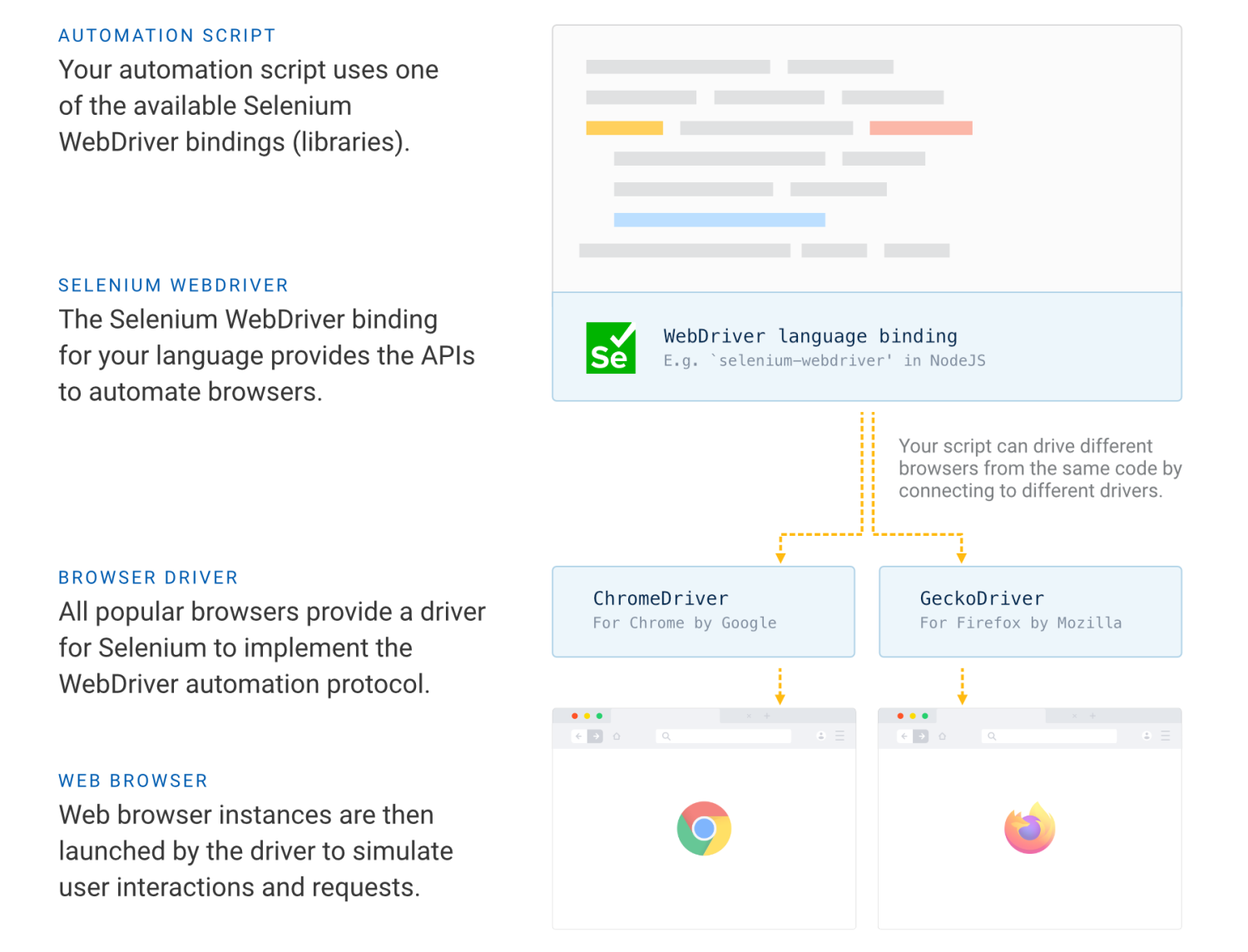

Let's review the important parts of our first script step by step. We start by importing the relevant exports of the selenium-webdriver package (line 2). We then connect to the service provided by the Selenium Docker container, request a new browser session, and launch a Chrome instance inside the container (lines 4-11). We need to specify the browser name here ('chrome') because Selenium can also connect to services that support multiple browsers and versions (we will look at this later).

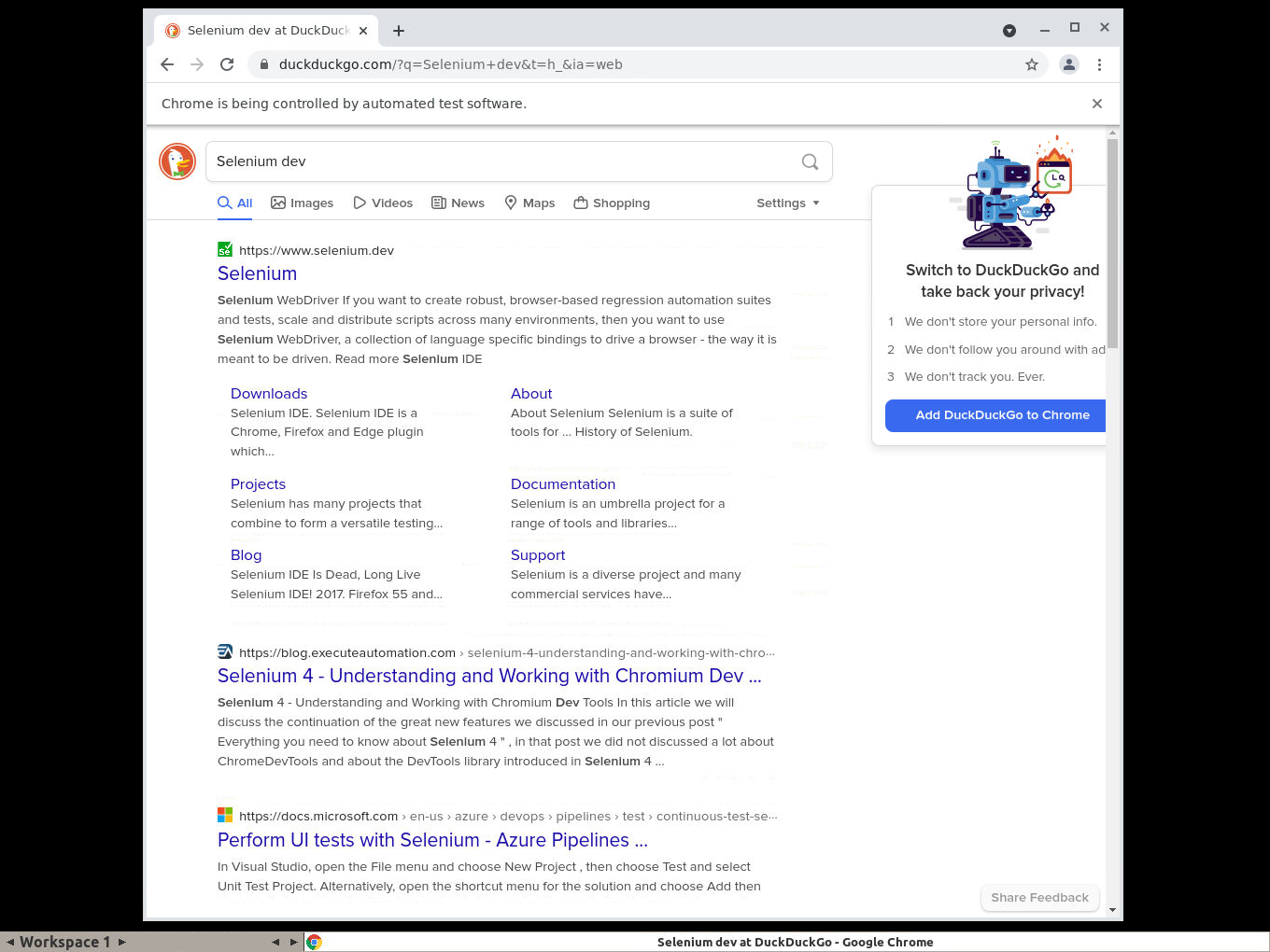

Our automation script tells the browser driver to open the DuckDuckGo search website (line 15). By default, Selenium waits for the page to load fully before continuing. After the call to load the page, we find the search input field, enter the search string Selenium dev, and instruct Selenium to simulate pressing the Enter key to submit the search form (lines 18-19). There are various ways to find elements on a page and interact with them, e.g. via the ID, element name, CSS class, XPath etc. The Selenium documentation provides a good overview of supported methods.
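To illustrate the different locator strategies, here is a minimal sketch that tries several of them in turn. It uses the shorthand locator objects accepted by selenium-webdriver (equivalent to By.id, By.name, By.css and By.xpath). The helper name findFirst and all four locator values are assumptions made for illustration; they are not taken from our script and may not match the current DuckDuckGo markup.

```javascript
// Candidate locators for the same search field, most specific first.
// All four values are guesses about the page markup (hypothetical).
const searchFieldLocators = [
  { id: 'searchbox_input' },                  // same as By.id(...)
  { name: 'q' },                              // same as By.name(...)
  { css: 'form input[type="text"]' },         // same as By.css(...)
  { xpath: '//form//input[@type="text"]' },   // same as By.xpath(...)
];

// Returns the first element matched by any locator, or null if none match.
// driver.findElements resolves to an empty array when nothing matches,
// so no exception handling is needed here.
async function findFirst(driver, locators) {
  for (const locator of locators) {
    const matches = await driver.findElements(locator);
    if (matches.length > 0) {
      return matches[0];
    }
  }
  return null;
}

// Usage inside the script:
// const input = await findFirst(driver, searchFieldLocators);
```

In practice a single stable locator (usually an ID or name) is preferable; a fallback list like this is mainly useful when the page markup is not under your control.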

After submitting the search, we also need to wait for the result. If the script ended right after submitting the form, it might finish before the result arrives, because Selenium (or the browser) wouldn't know what to wait for. In our case, we wait for an element on the result page with the ID links, which DuckDuckGo renders as part of the search results. We use the driver.wait call to wait until this element has been rendered (line 22). Selenium offers various ways to wait for elements and other changes on the page, and you can also write custom wait logic.
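As a rough sketch of what custom wait logic can look like: driver.wait also accepts a function that receives the driver and is polled until it returns a truthy value. The helper name atLeast, the selector and the timeout below are assumptions chosen for illustration, not part of our script.

```javascript
// Builds a custom wait condition that becomes truthy once at least `min`
// elements match. `locator` may be a By instance or a shorthand object
// such as { css: '...' }.
function atLeast(locator, min) {
  return async (driver) => {
    const elements = await driver.findElements(locator);
    // driver.wait keeps polling for as long as the condition is falsy.
    return elements.length >= min ? elements : false;
  };
}

// Usage inside the script (selector and timeout are illustrative):
// const results = await driver.wait(atLeast({ css: '#links a' }, 1), 10000);
```

Passing an explicit timeout makes the wait fail with an error instead of blocking indefinitely if the results never show up.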

Last but not least, we end the browser session by calling driver.quit (line 25). This is important to avoid leaving an open browser session running in the container, which would prevent us from starting another one until it times out. It's a good idea to wrap the script in try ... finally to ensure that it always closes the browser, even if an error occurs.
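The try ... finally pattern can be sketched as a small helper. The name withSession and its two parameters are hypothetical; createDriver stands for whatever code builds the driver in your script.

```javascript
// Creates a driver, runs `task` with it, and always ends the session.
async function withSession(createDriver, task) {
  const driver = await createDriver();
  try {
    return await task(driver);
  } finally {
    // Runs on success and on error, so the container is free for the
    // next session either way.
    await driver.quit();
  }
}

// Usage inside the script (buildDriver is a placeholder for the
// connection code from the first part of the script):
// await withSession(buildDriver, async (driver) => {
//   await driver.get('https://duckduckgo.com/');
// });
```

Any error thrown by the task still propagates to the caller after the session has been closed, so failures remain visible.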

We can simply start the script inside our container by using the node (Node.js JavaScript runtime) command. Make sure to connect to the VNC service (see above) so you can see the browser in action: