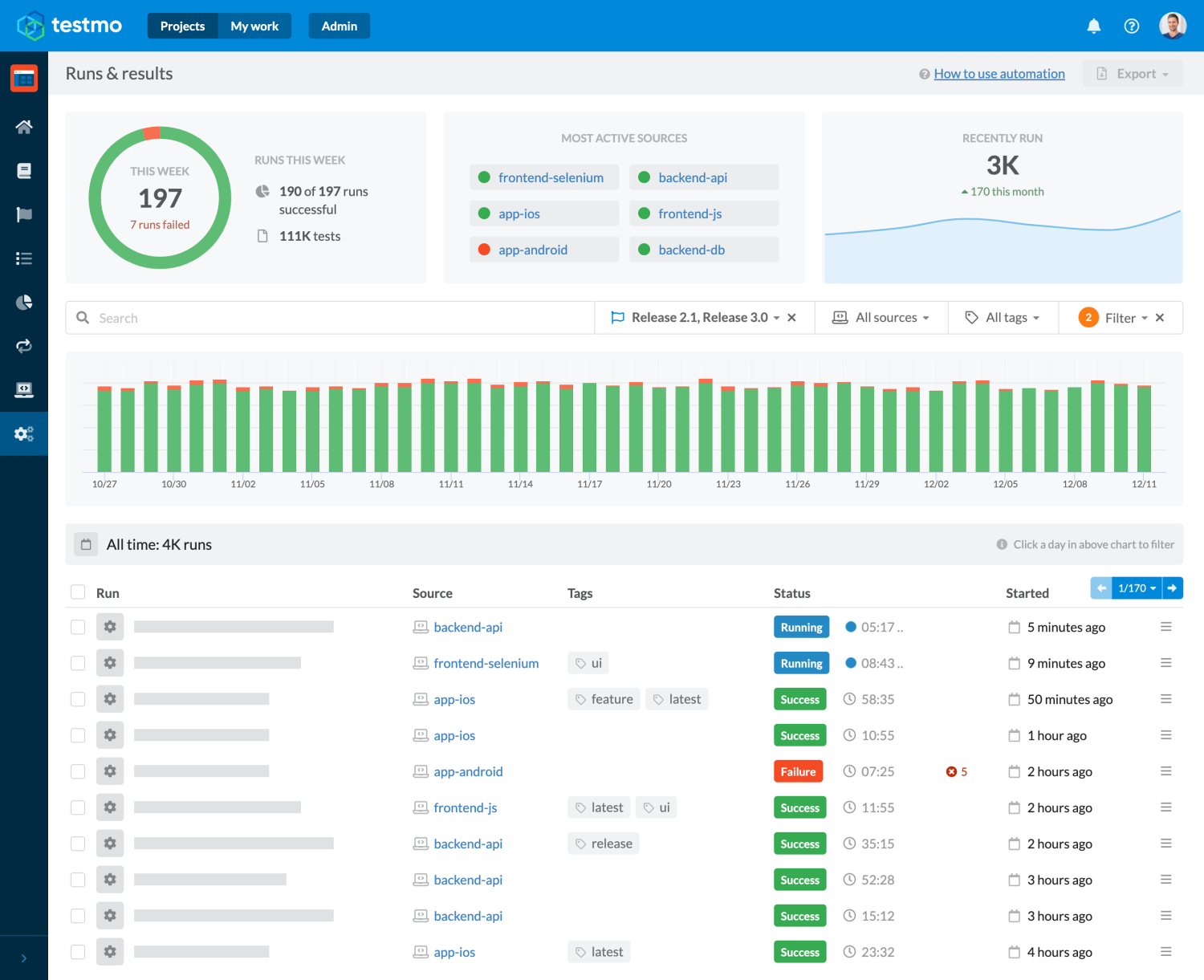

Bitbucket comes with powerful CI pipeline features to automatically build, test and deploy your projects. If you host your code on Bitbucket, the pipeline feature is a great way to verify your code and run your automated tests whenever new code is committed.

It doesn't matter whether you run your automated tests as part of your main project pipeline or use a separate project and repository to host and execute just your automated tests. Adding them to your CI pipeline ensures that your tests run consistently and regularly, and it lets your entire team track the results. So let's get started!

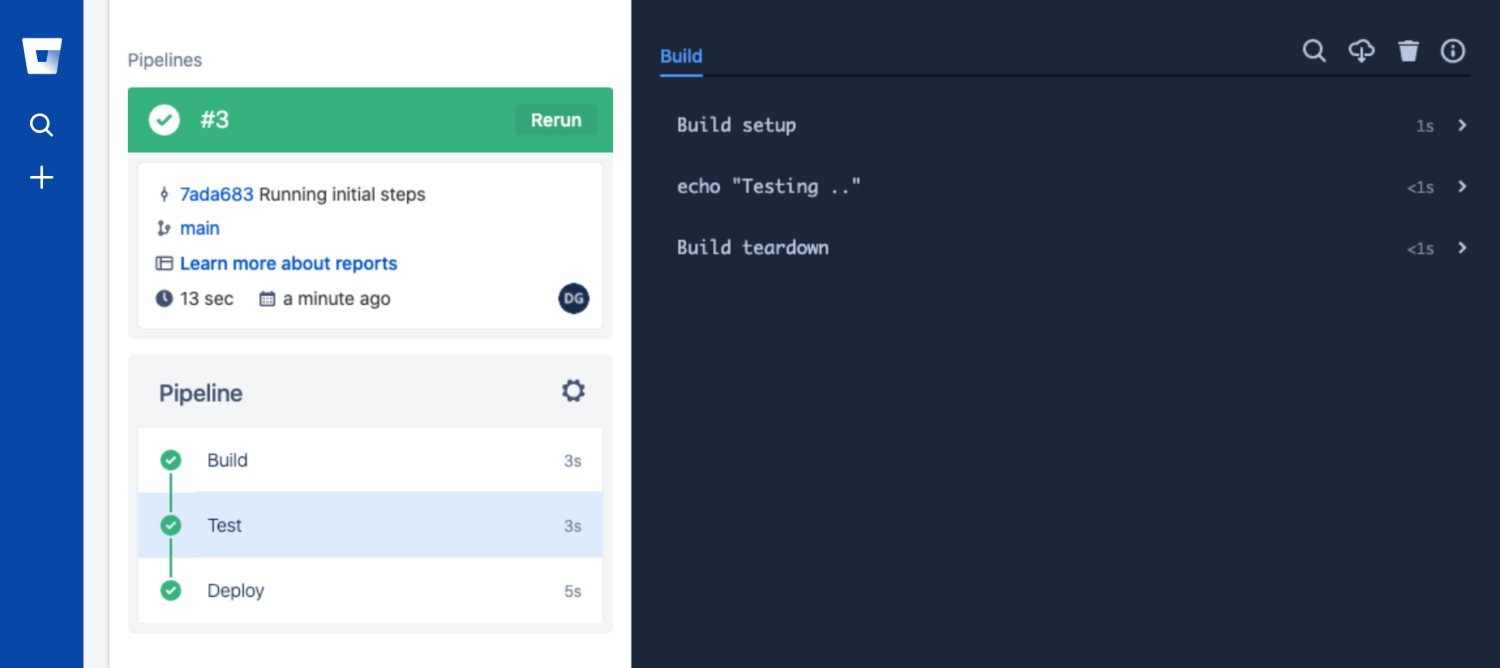

Initial Bitbucket Pipeline & Repository

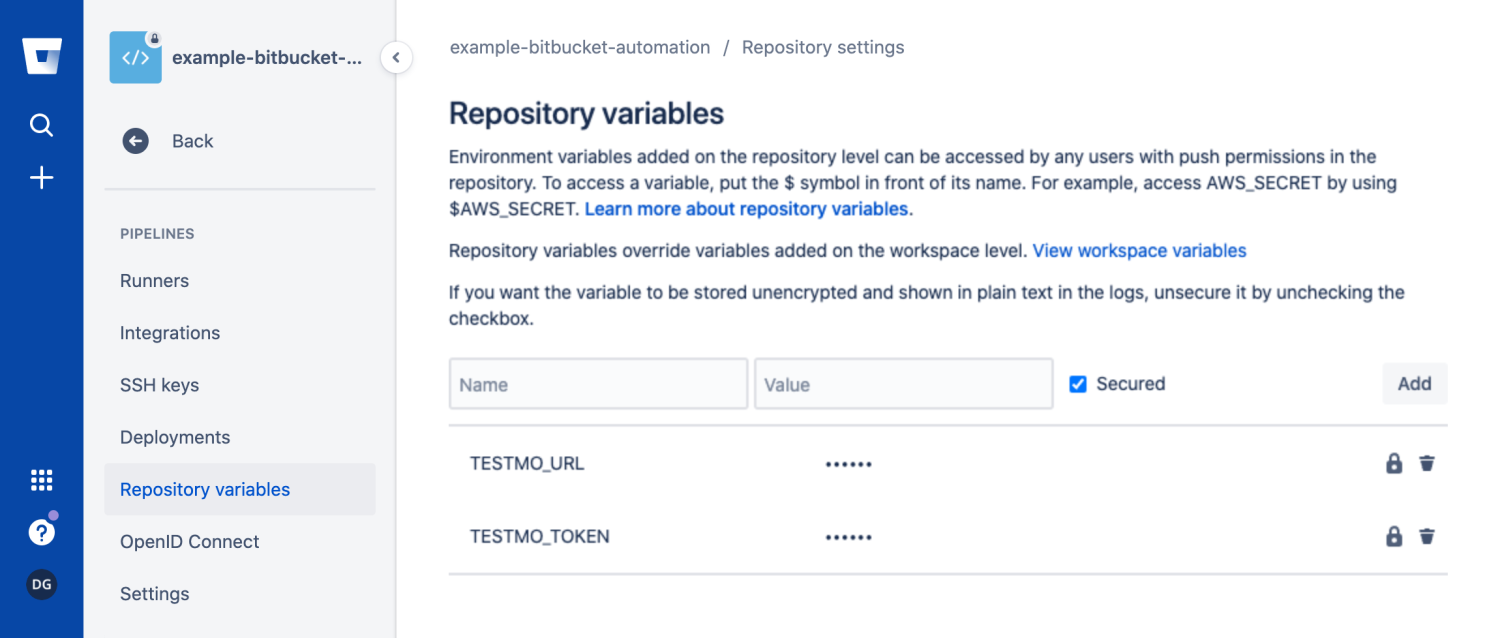

We first create a new repository in Bitbucket to host our code and pipeline for this article. We will call it bitbucket-example-automation, but you can name it anything you like. The project repository for this article is also available on Bitbucket, so you can review the full code there.

Next we are going to create our initial pipeline configuration. For this, simply add a new file called bitbucket-pipelines.yml to the root of your repository. Bitbucket always looks for a file with this exact filename to find the repository's pipeline configuration. Add the following basic pipeline to the file: