In this article we will look at setting up a modern CI workflow to run automated tests on CircleCI with Docker, including collecting and reporting test results. The setup explained here will work with practically any test automation tool, so you can easily adjust it to the frameworks and platforms you use.

Initial Git Repository & CI Config

We will start by creating a new Git repository on GitHub. If you are new to Git or GitHub, simply follow these instructions. If you don't already use the git command-line tool, we recommend getting familiar with it and using it. For this article we are creating a repository called example-circleci-automation, but you can name it anything you like. We've also published this article's Git repository, so you can review the complete files there at any time.

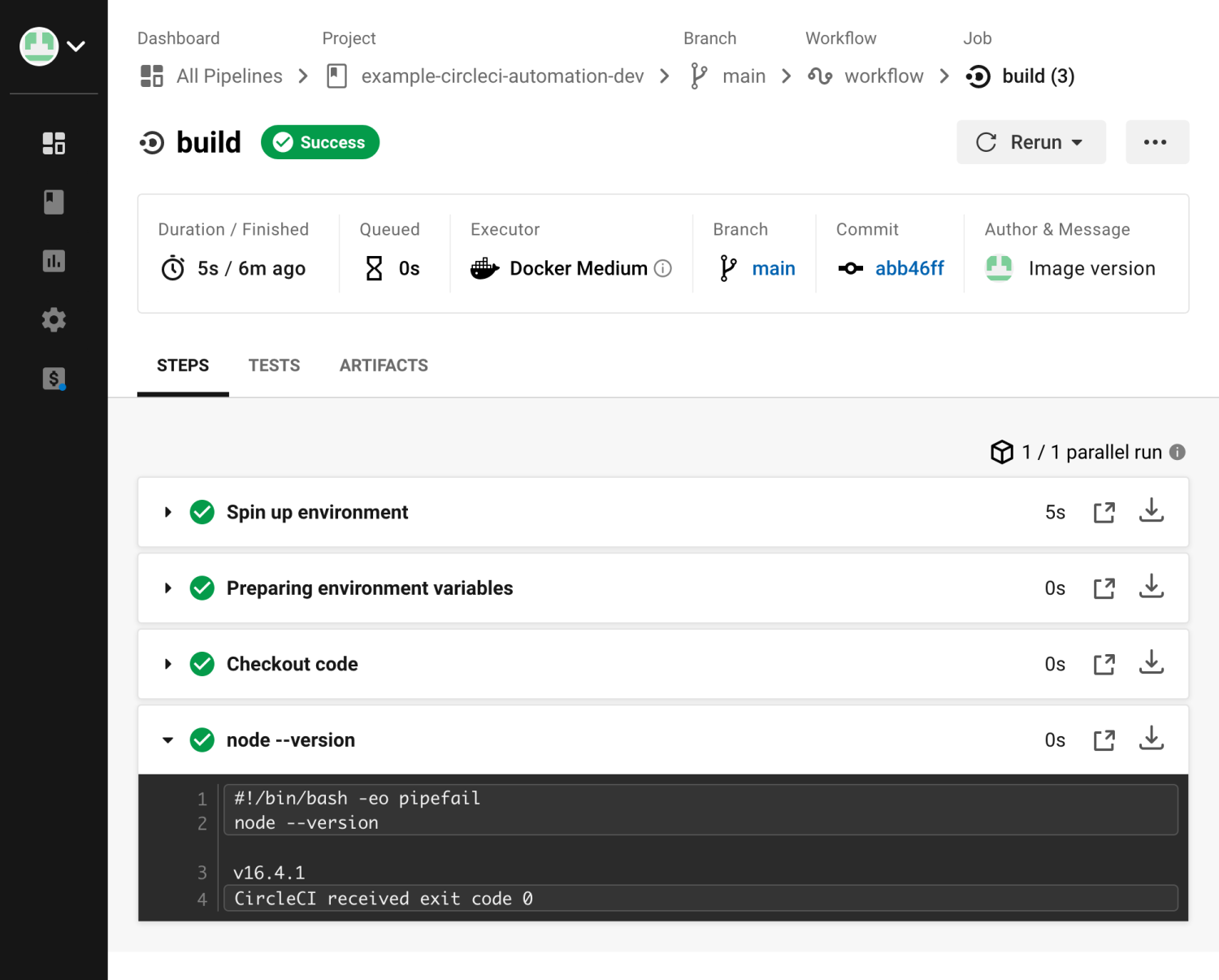

Next, you will need to create a CircleCI account and link it to the new repository (CircleCI calls this following the project). Once you follow a project, CircleCI automatically starts a new CI build whenever you push a new version of your code to GitHub.

To start, we will add just a single file to our repository: the main CircleCI configuration file, located at .circleci/config.yml.
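As a rough starting point, a minimal config.yml could look like the following sketch. It assumes CircleCI's version 2.1 config format and the cimg/base:stable convenience image. The job name `test`, the workflow name `build-and-test` and the placeholder `echo` command are hypothetical stand-ins, not the article's final configuration.

```yaml
# Minimal sketch of .circleci/config.yml (assumes CircleCI config version 2.1)
version: 2.1

jobs:
  test:  # hypothetical job name
    docker:
      # Assumption: CircleCI's generic convenience image; swap in the image your tests need
      - image: cimg/base:stable
    steps:
      # Check out the commit that triggered this build
      - checkout
      - run:
          name: Run tests
          # Placeholder command; replace it with your test automation tool's command
          command: echo "Running tests"

workflows:
  build-and-test:  # hypothetical workflow name
    jobs:
      - test
```

Committing this file and pushing it to GitHub should trigger a first build for the followed project. Later steps would replace the placeholder command with a real test run and add the collection of test results.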