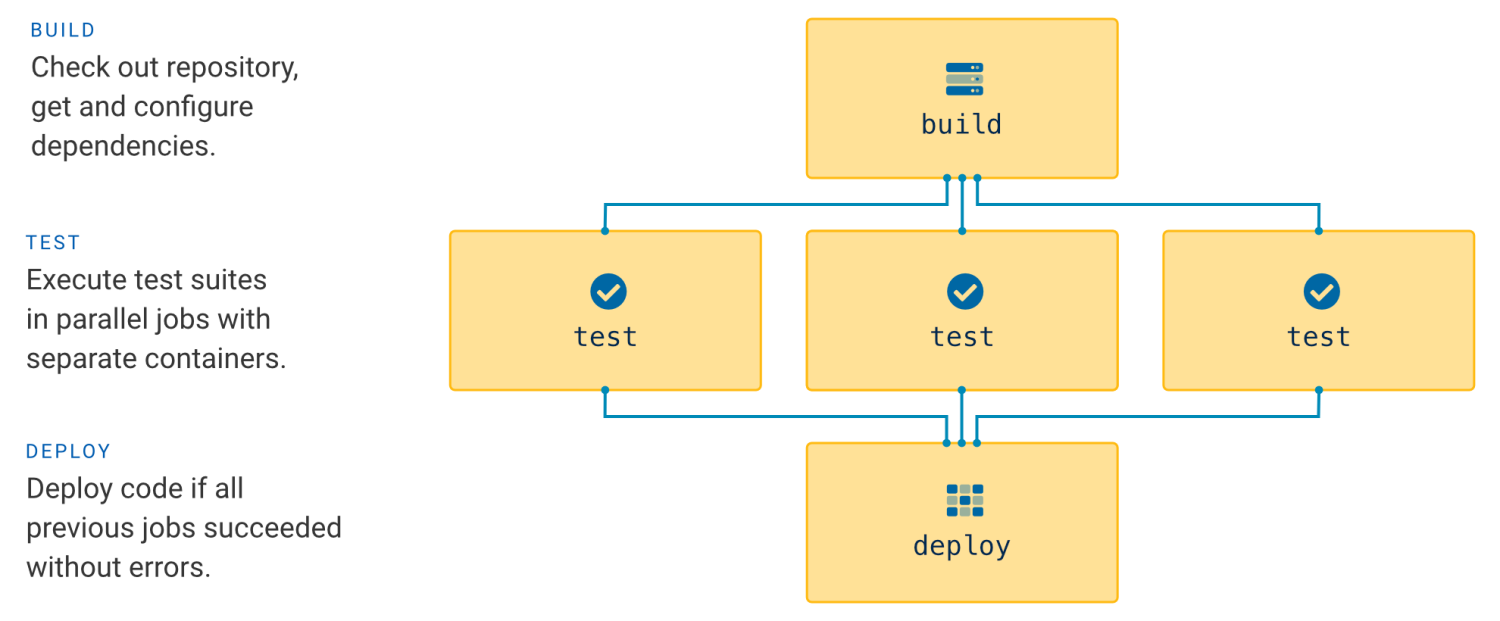

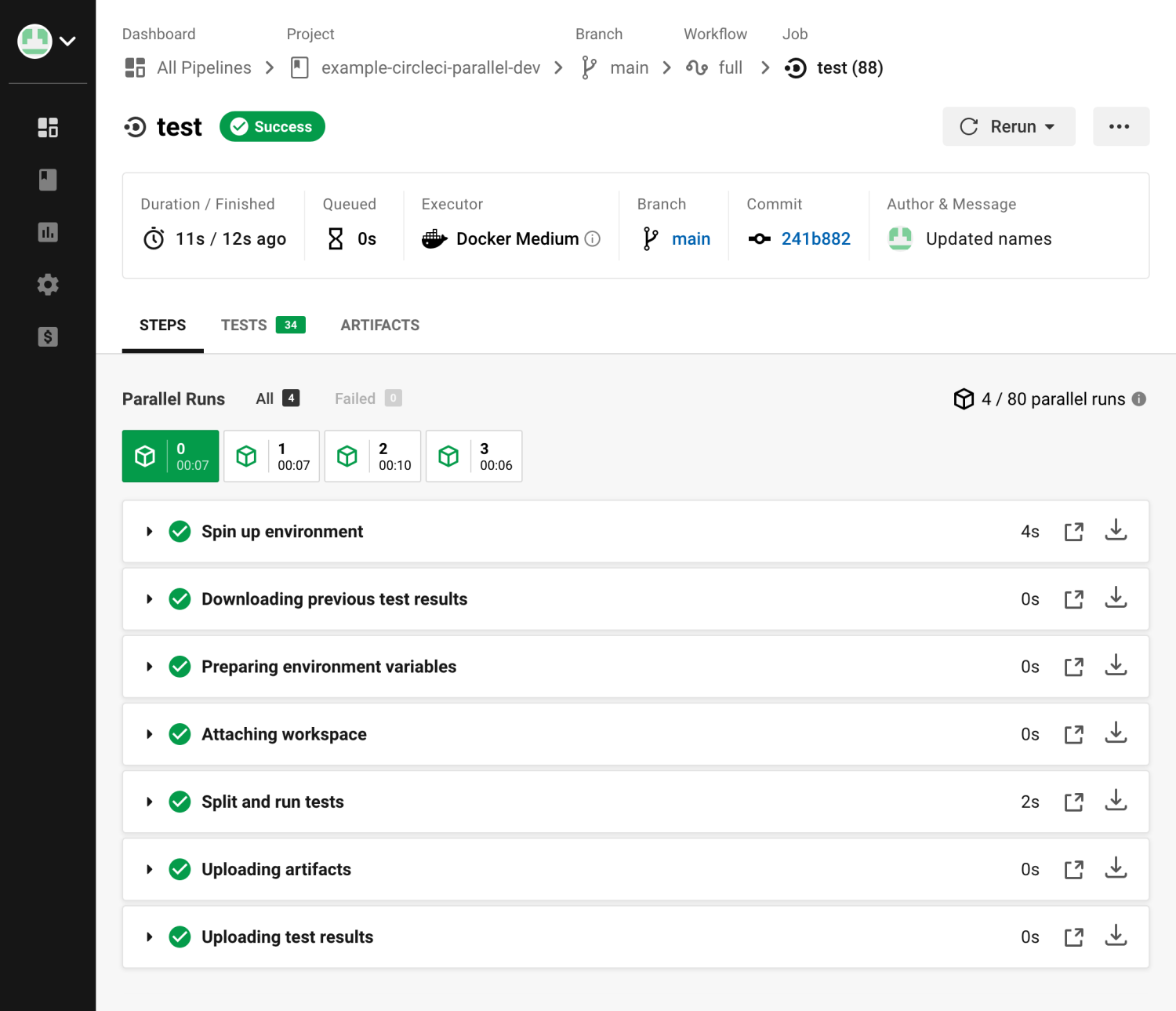

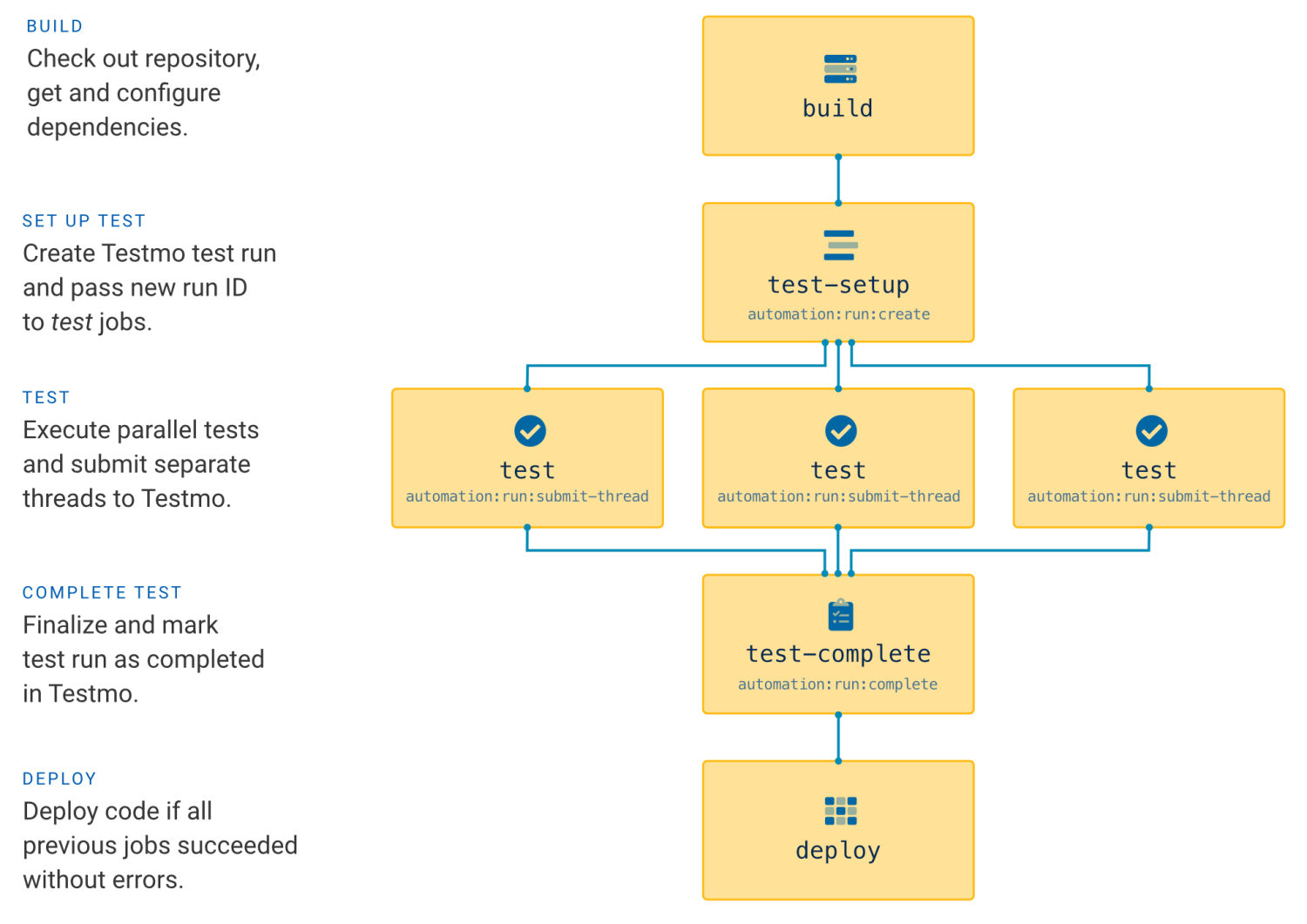

As your projects grow and you add more automated tests, running the entire test suite as part of your CircleCI workflow takes longer and longer. This delays feedback for your development team and slows down deployments.

Fortunately, automated tests are usually easy to run in parallel, because each test should be executable independently of the others. In this article, we explain how to add concurrency to your CircleCI workflow and reduce the time your automated tests take to run in your CI pipeline.

If you are new to setting up test automation with a CI pipeline, we recommend starting with our introductory guide on test automation with CircleCI. Once you understand the basics of setting up the CI workflow, you can further optimize your test suites with parallel execution as outlined below.