Summary: There are few things more frustrating for a developer than having to wait for a long time for all tests to complete after pushing new code. In this guide we show step by step how to drastically reduce testing times with parallel testing.

Running your automated tests in parallel as part of your GitHub Actions CI pipeline is a great way to improve build times and provide faster feedback to your dev & QA teams. Not only does running your tests in parallel reduce wait times, it also allows you to release bug fixes and updates to production faster without having to compromise on the number of tests you run.

If you are new to running your automated tests with GitHub, we also recommend taking a look at our introduction guide on GitHub Actions test automation.

Running tests in parallel with GitHub Actions is relatively straightforward, but there are a few configuration settings you need to understand to implement it. In this guide we will go through all the details of setting up parallel test automation to speed up your builds, as well as reporting the results to test management. So let's get started!

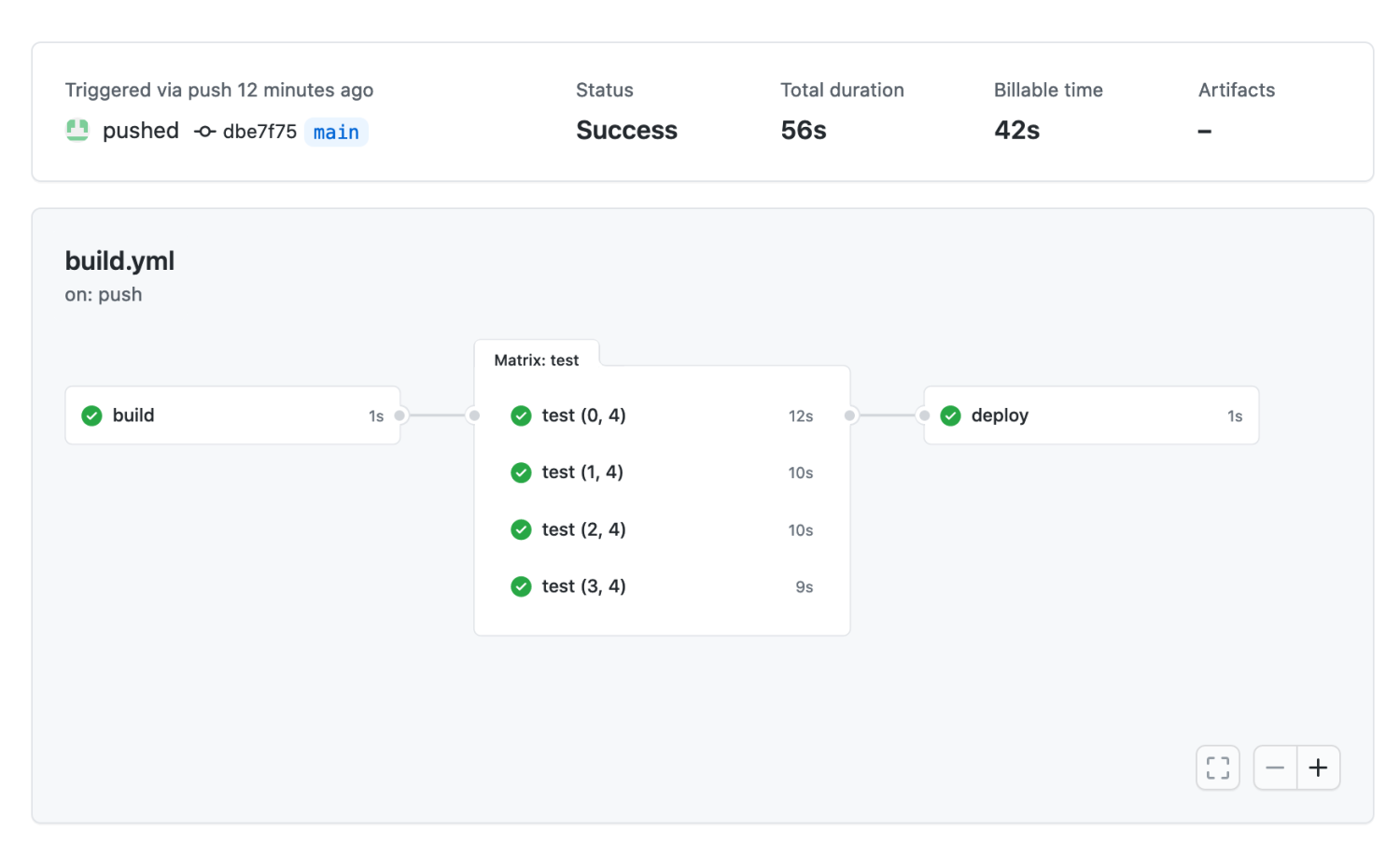

GitHub Actions Parallel Test Workflow

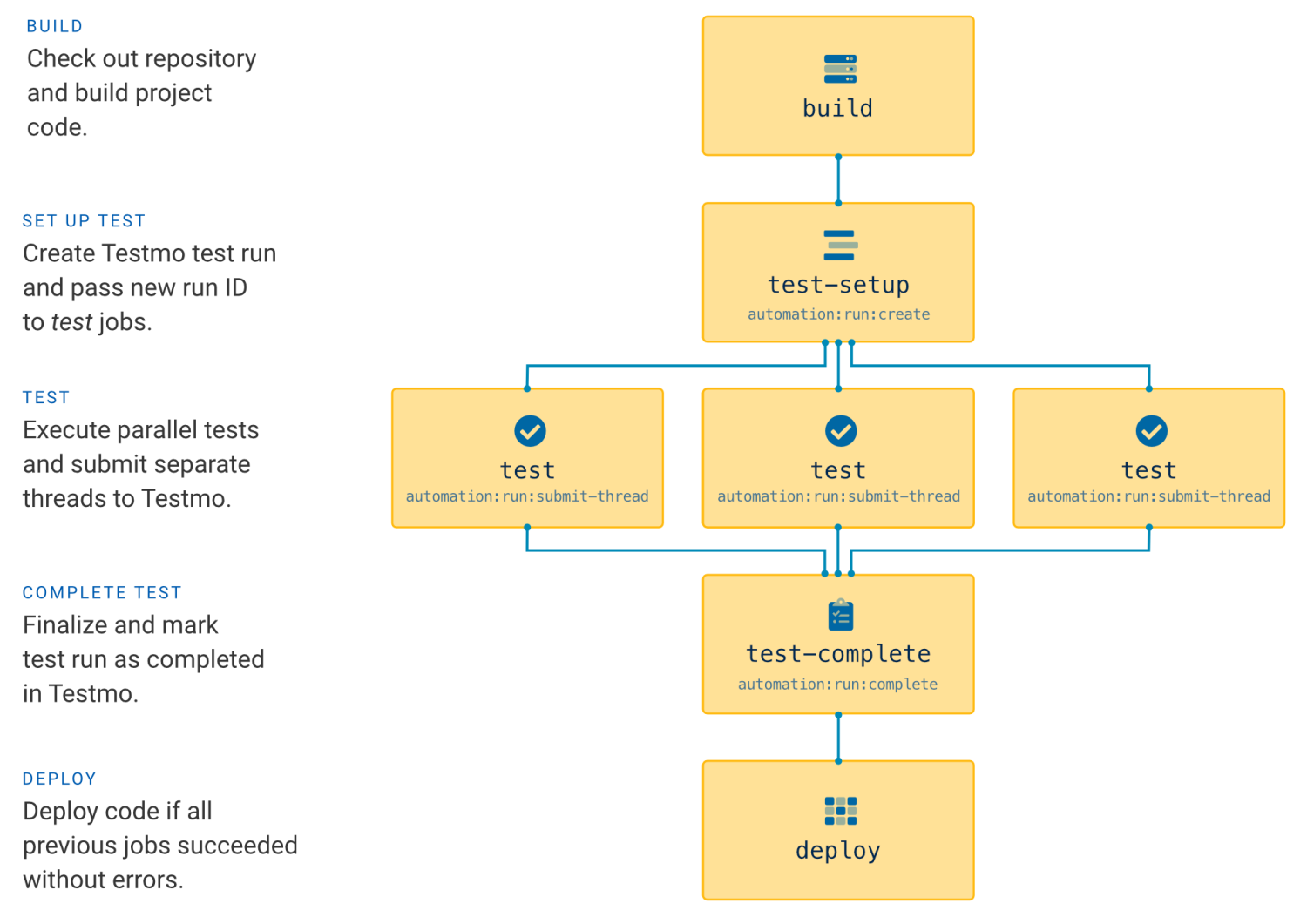

To run our tests in parallel in GitHub Actions and reduce test times, we need to run multiple test jobs at the same time. Each test job instance then needs to execute a different subset of our tests.
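To make the idea of "a different subset per job" concrete, here is a minimal sketch of one way to divide test files between jobs. It is an illustration only, not the approach this guide necessarily uses: the function name `select_subset`, the 1-based job index and the example file names are all assumptions made for this example.

```python
from typing import List, Sequence


def select_subset(test_files: Sequence[str], index: int, total: int) -> List[str]:
    """Return the test files for job `index` (1-based) out of `total` parallel jobs.

    Hypothetical helper for illustration; not part of GitHub Actions.
    """
    if total < 1 or not 1 <= index <= total:
        raise ValueError("index must be between 1 and total")
    # Sort first so every job computes the same ordering and subsets never overlap.
    ordered = sorted(test_files)
    # Round-robin assignment: job 1 takes items 0, total, 2*total, ...;
    # job 2 takes items 1, total + 1, ...; and so on.
    return ordered[index - 1::total]


if __name__ == "__main__":
    # Example file names are made up for this sketch.
    files = [
        "tests/test_login.py",
        "tests/test_cart.py",
        "tests/test_search.py",
        "tests/test_checkout.py",
        "tests/test_profile.py",
    ]
    for job in range(1, 4):
        print(job, select_subset(files, job, 3))
```

Because every job sorts the same list and takes every `total`-th entry starting at its own offset, the subsets are disjoint and together cover all files, so no test is skipped or run twice.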

For our example project we will start by defining separate build, test and deploy jobs. Strictly speaking we wouldn't need the build and deploy jobs just for our example project, but we want to create a full example workflow so it's easier to use this for a real project. The build and deploy jobs will be empty in our example, but you can add any build preparation or deployment code to your workflow as needed.

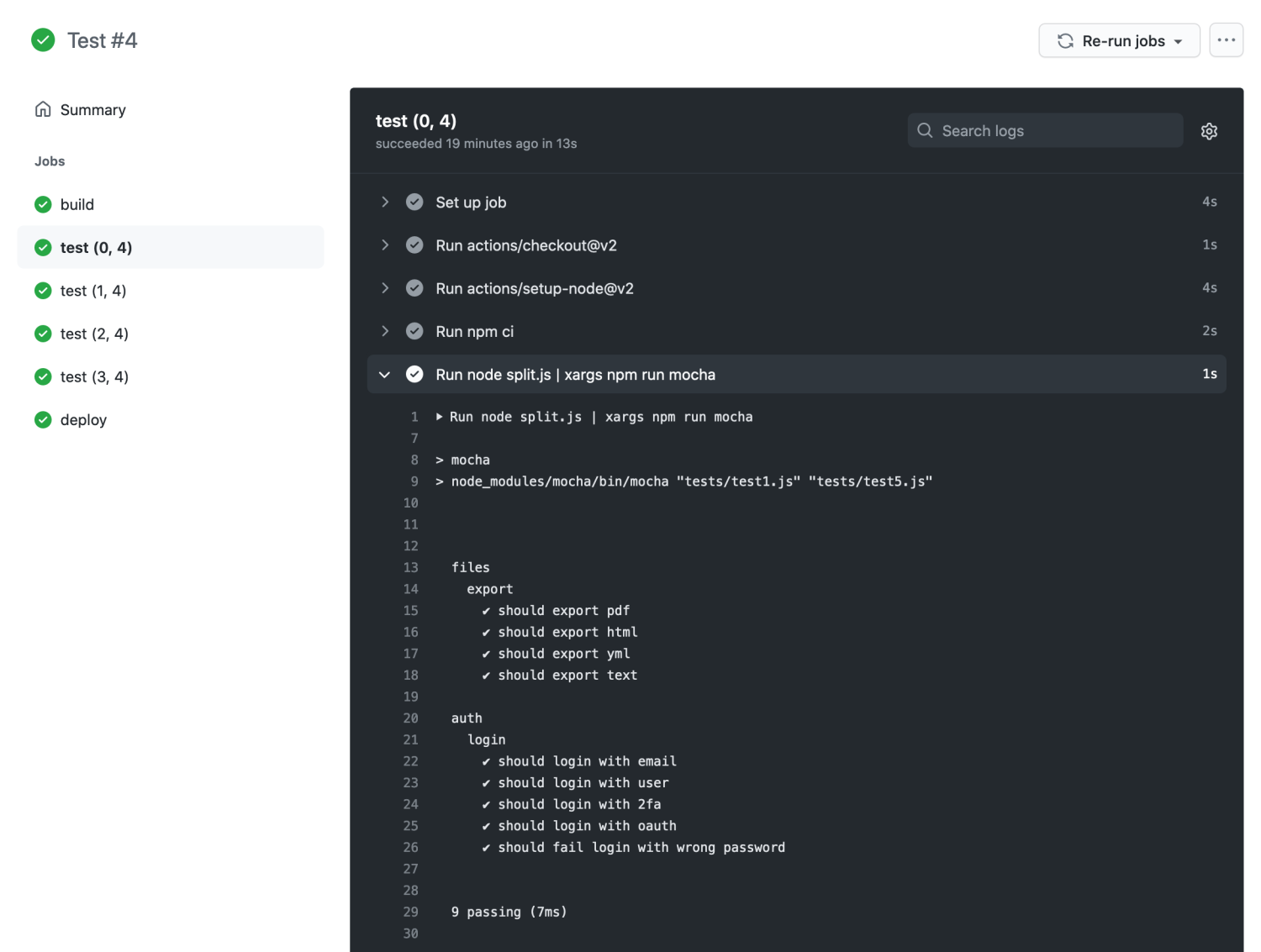

Our initial workflow will run the build job, followed by multiple parallel test jobs, and finally run the deploy job if all tests pass: