Bitbucket CI pipelines support parallel test automation jobs, which are a great way to shorten build times and give your dev and QA teams faster feedback on test results. It can be frustrating for developers to wait a long time for tests to complete after new code is pushed. Not only does running your tests in parallel reduce wait time, it also lets you deploy bug fixes and updates to production faster without limiting the number of tests you can run.

If you haven't set up test automation with Bitbucket before, we also have an introductory article on Bitbucket CI test automation pipelines, which is a good way to get familiar with the basic concepts.

Executing your automated tests in parallel jobs in Bitbucket is quite straightforward: you just need to learn a few concepts and configuration options. In this guide we will go through all the details to get this up and running, including reporting our test results to test management. Let's get started!

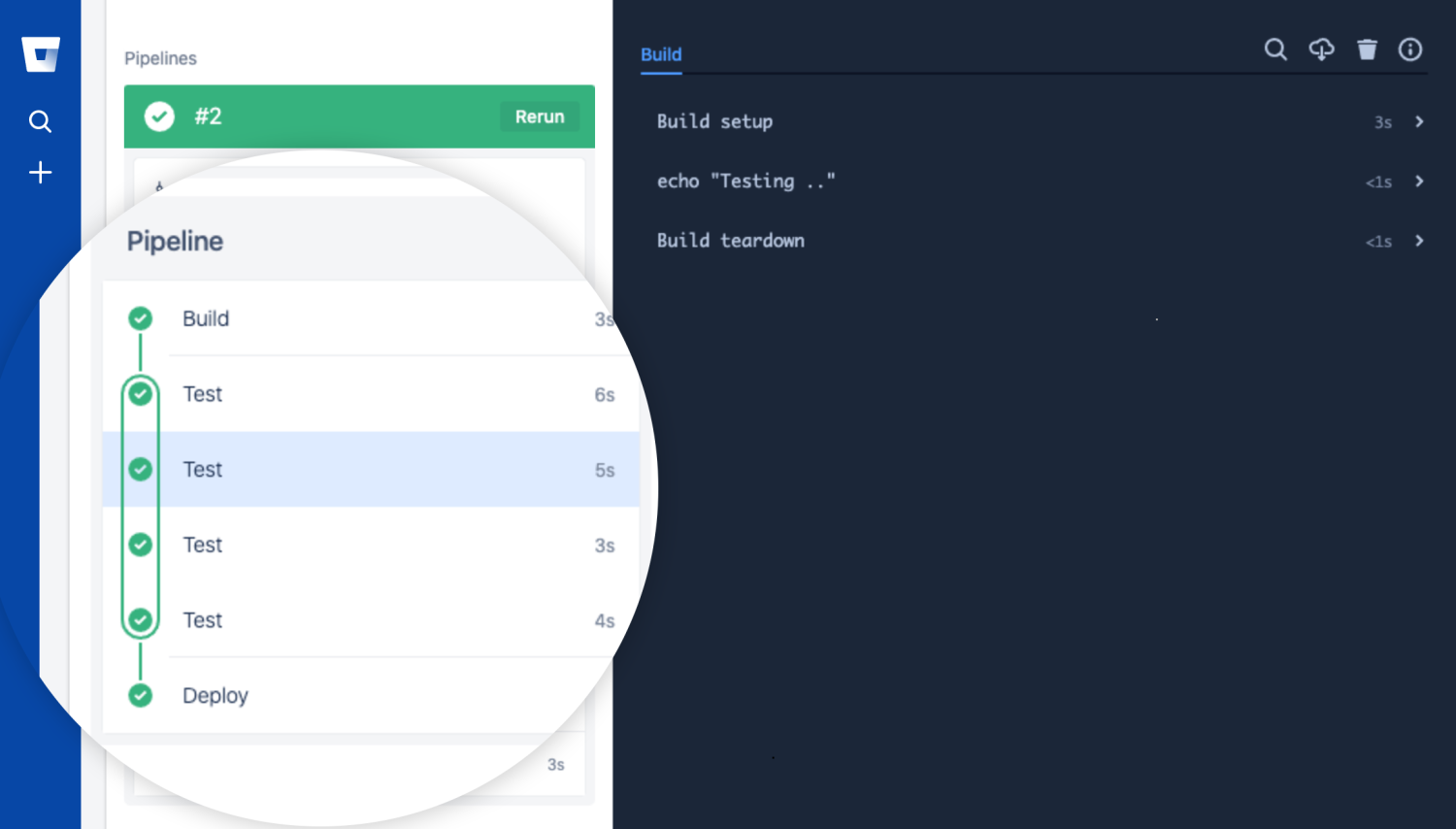

Bitbucket Pipelines Parallel Testing Workflow

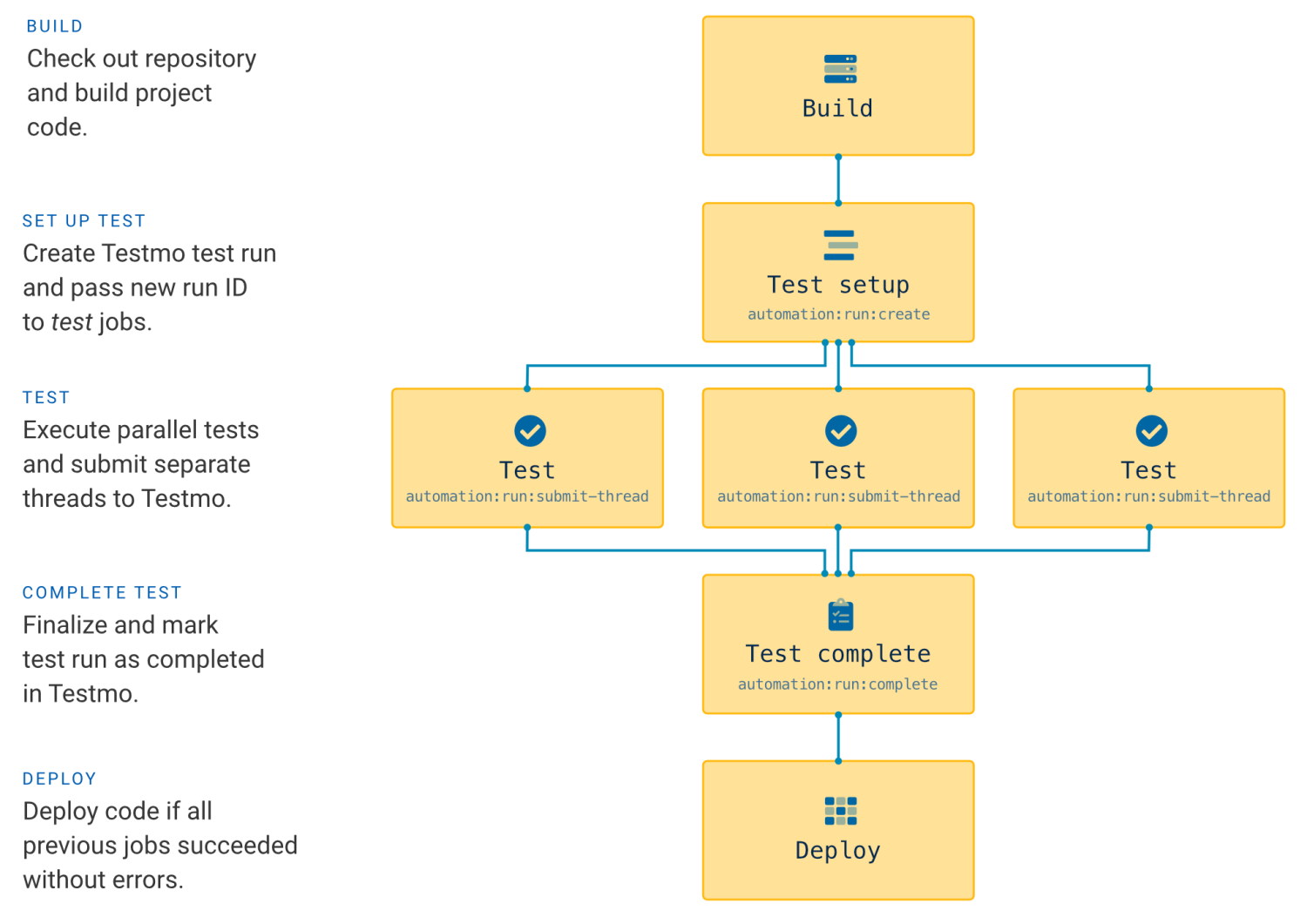

To run our automated tests in parallel, we need to tell Bitbucket to start multiple test jobs at the same time. Each testing job then runs its own subset of our automated tests, so that together the jobs cover the full test suite.
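
To make the idea of a "subset per job" concrete, here is a minimal sketch of one way to divide test files among jobs. It is only an illustration under stated assumptions: the `shard` helper, the file names and the 0-based job index are all hypothetical and not part of Bitbucket or of this article's project. Each job is assumed to know its own index and the total number of jobs.

```python
def shard(tests, index, total):
    """Return the tests assigned to job `index` (0-based) out of `total` jobs.

    Hypothetical helper for illustration only.
    """
    if total < 1 or not 0 <= index < total:
        raise ValueError("index must satisfy 0 <= index < total")
    # Sort first so every job sees the same order, then take every
    # `total`-th file starting at this job's index (round-robin split).
    return sorted(tests)[index::total]


# Hypothetical test files and three parallel jobs.
tests = ["test_a.py", "test_b.py", "test_c.py", "test_d.py", "test_e.py"]
for job in range(3):
    print(job, shard(tests, job, 3))
```

Because every job sorts the same list and picks a different offset, each test file ends up in exactly one job. A round-robin split like this ignores how long each test takes, so a real setup may prefer to balance the subsets by test duration instead.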

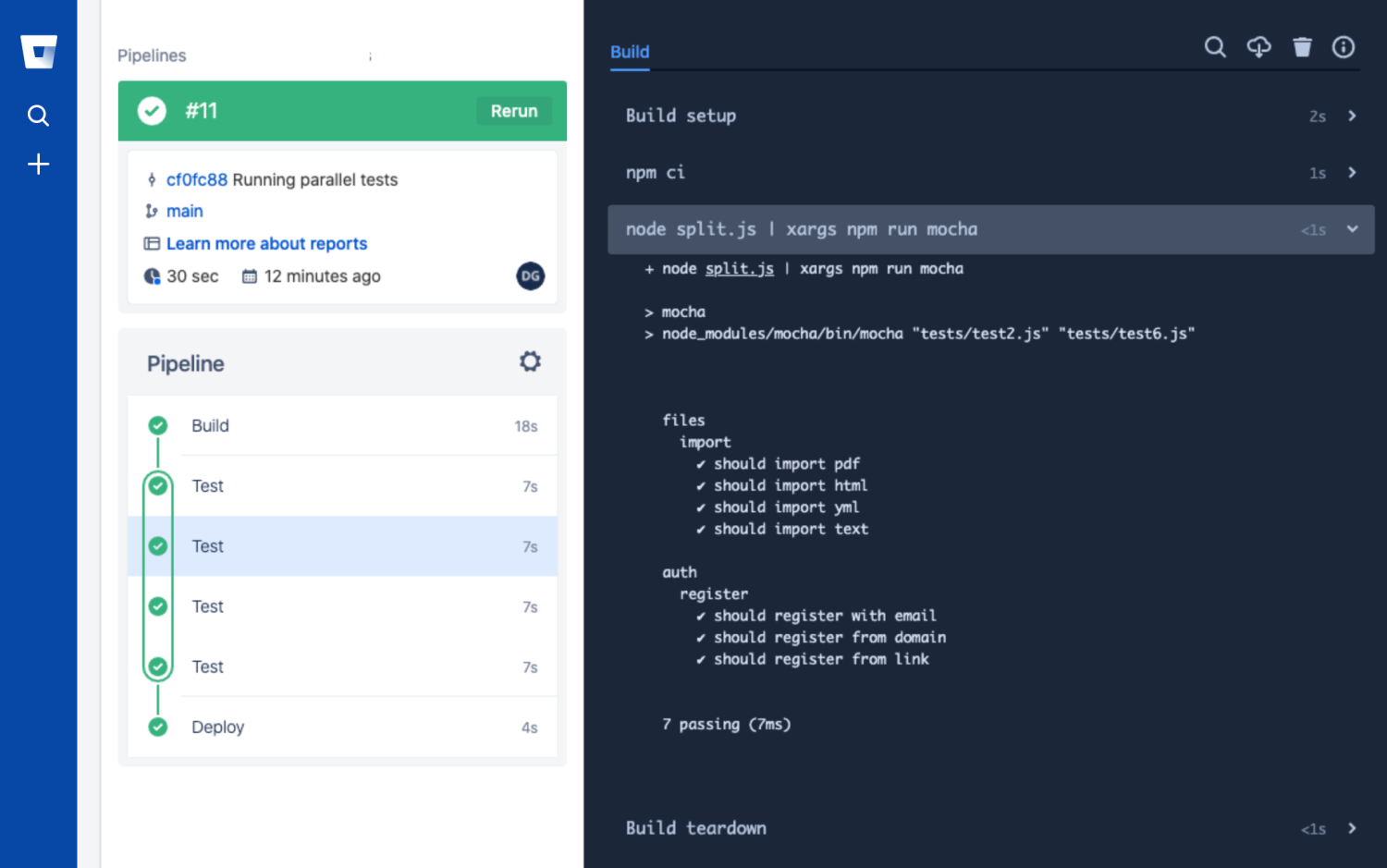

In our example project for this article we add separate Build, Test and Deploy steps to our Bitbucket pipeline. Our initial basic pipeline runs the Build step first, followed by multiple parallel Test jobs, and finally runs Deploy only if all tests in all our testing jobs pass (Bitbucket will not execute the Deploy step if any of our tests fail):